Artificial Intelligence (AI) is advancing rapidly. Its prospects have left many people upset, for it’s assumed that job automation portends great danger to humanity. Arguing about the plausibility or otherwise of this claim is, however, not the intent of this article. Notably, when AI is mentioned, the average non-technical person thinks of high-end robots. This is, however, an oversimplification of a rather broad concept.

It’s safe to say AI is a huge tree comprising varied but interlinked branches, among which are Natural Language Processing (NLP) and Machine Learning (ML). So, while self-driving cars are AI applications, so are Siri on your iPhone and YouTube’s video recommendations. Being barely dissociable from data science, ML has gained particular attention in the business world. But what is it all about?

What’s Machine Learning?

One of the most popular definitions of machine learning was given by Arthur Samuel in 1959. He considered it a subfield of Computer Science that gives “computers the ability to learn without being explicitly programmed.” This is apt but shouldn’t be taken to mean ML systems are built without any programming effort, or that they acquire knowledge on their own from scratch.

Instead, ML systems are created to build upon already acquired knowledge. This makes them perform far better than programs designed with hardcoded rules. For example, besides being a pain to write, a program designed to detect cats in pictures would be quite ineffective if built by a programmer who manually defined the features of various cat breeds. Such a program would likely fail when faced with factors (e.g., obstruction, reflections and the presence of unaccounted-for features) that distort images and defy predetermined rules. Using machine learning, all that’s needed is to gather large datasets (tons of cat pictures, in the above case) to train a model, then tune it so that the program produces the best possible results when faced with completely new data.
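To make the idea of training on examples a bit more concrete, here is a minimal sketch in Python. It is not how a real image classifier works: the two features (`ear_pointiness`, `whisker_length`) and every number are invented for illustration, and the "model" is just the average of each group of examples. The point is only that the decision rule comes from the data rather than from hand-written conditions.

```python
# Toy illustration: learn a "cat vs. not cat" rule from labeled examples
# instead of hand-writing it. All numbers below are invented for the sketch.
from math import dist

# Each example: ((ear_pointiness, whisker_length), label). Both features are hypothetical.
training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.7, 0.7), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.1, 0.3), "not cat"),
    ((0.3, 0.2), "not cat"),
]

def train(examples):
    """Return the average feature vector (centroid) for each label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(model, features):
    """Pick the label whose centroid is closest to the new example."""
    return min(model, key=lambda label: dist(model[label], features))

model = train(training_data)
# Two examples the model has never seen before.
print(predict(model, (0.85, 0.75)))
print(predict(model, (0.15, 0.25)))
```

Adding more labeled examples changes the learned centroids without touching `predict`, which is the practical difference from a program built on hardcoded rules.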

In this case, such a program is considered to be ‘learning’ from data. There are two major types of problems ML engineers attempt to solve: regression and classification problems. For the sake of simplicity, I won’t go in-depth into these or discuss any ML algorithm. But the point is: you make use of ML products daily, perhaps without realizing it.
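Without going into depth, the difference between the two problem types can be sketched in a few lines of Python: regression predicts a number, while classification predicts a category. The data, the function names (`fit_line`, `fit_threshold`) and the two simple methods used here (a least-squares line and a midpoint cutoff) are assumptions chosen for illustration, not a recommendation of any particular algorithm.

```python
# Regression predicts a number; classification predicts a category.
# All data points are made up for illustration.

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def fit_threshold(values, labels, positive):
    """Learn a cutoff halfway between the averages of the two classes."""
    pos = [v for v, label in zip(values, labels) if label == positive]
    neg = [v for v, label in zip(values, labels) if label != positive]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

# Regression: hypothetical house sizes (square meters) and prices (thousands).
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]
slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 90 + intercept  # a continuous value

# Classification: hypothetical count of exclamation marks per email.
exclamations = [0, 1, 2, 7, 8, 9]
labels = ["ham", "ham", "ham", "spam", "spam", "spam"]
cutoff = fit_threshold(exclamations, labels, positive="spam")
predicted_label = "spam" if 6 > cutoff else "ham"  # a discrete category

print(predicted_price, predicted_label)
```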

When searching Google, you’re interacting with an algorithm that has learned (and continues to learn) how to rank search results based on what’s considered most relevant to your query. Facebook uses ML to suggest new friends to you, Netflix’s movie recommendation feature is built on top of it, and Quora uses it to determine the type of questions you’d like to read about, just to mention a few examples.

Why You Should Learn Machine Learning

It’s a big deal: Machine Learning is the rave of the moment. Tons of companies are going all out to hire competent engineers, as ML is gradually becoming the brain behind business intelligence. Through it, businesses are able to understand consumers’ preferences, thereby increasing profits. In 2006, Netflix announced a prize of $1 million for the first to improve the accuracy of its recommendation system by 10%. The prize is proof of the importance placed on ML and of Netflix’s anticipation of considerable profits from even a small improvement in the accuracy of its recommendations.

It’s closely linked to data science: Just as humans learn from experience, ML systems learn from data. Thus, many ML engineers are made to wear two hats (machine learning engineering and data science) while carrying out their daily work, which is arguably a good thing. As you probably know, data science has been rated the sexiest job of the 21st century. Learning ML would make you more knowledgeable in data science and thus more attractive in the labor market.

To be less worried about the dangers of AI: Many things have been said about AI and whether or not it will really snatch jobs. Fortunately, however, knowledge of machine learning could take you a step towards protection from any foreseen dangerous outcome of mass-scale AI implementation because, as of today, most systems are built by humans. Also, there’s likely to be a steady demand for engineers, come what may.

How to Get Started

Gone are the days when ML knowledge used to be an exclusive preserve of Ph.D. researchers and students. Today, you can teach yourself ML without needing to enroll in a University – although a formal education may be quite beneficial. If you aren’t cut out for higher degrees, here are some useful tips to get started with ML.

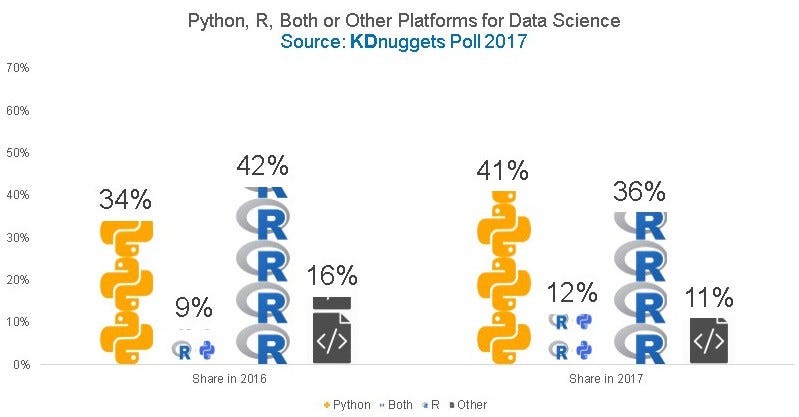

Learn a Programming Language: You NEED to have some programming knowledge under your belt to get started. Python comes in handy, because it’s used in many machine learning projects and has a large collection of data science libraries. It’s also relatively easy to learn and comprehend.

Get a high-end PC: Chances are you’d make use of only small data sets when starting out. But as time passes, you might want to delve into more complex projects. To get the best out of the learning experience, you should ensure that your PC meets certain requirements, including enough Random Access Memory (RAM) and storage. Also, to play with Deep Learning (a subfield of ML built on neural networks), you’d need capable Graphics Processing Units (GPUs).

Learn the prerequisites: Machine Learning draws a lot from three areas in Mathematics: Statistics, Linear Algebra and Calculus. If you aren’t comfortable with Math, don’t fret. Many of the things you actually need to learn in order to get started are quite basic.

Read ML Academic Papers: Many ML papers are published regularly, and reading tons of them is a good way to learn new things and keep up with the pace of ML research.

Learn from Videos: YouTube is your friend.

Read Blogs and Follow Online Communities: Follow blogs and online communities that can help fast-track the learning process. Reddit’s machine learning community is a good example of the latter.

Practice: Practice makes perfect, they say. So, try your hand at machine learning projects and participate in contests hosted on Kaggle and similar sites.
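As a first practice exercise, the sketch below shows how the three prerequisite areas mentioned in the tips above (Statistics, Linear Algebra and Calculus) can meet in one tiny training loop written in plain Python. The data, the learning rate of 0.01 and the 200 iterations are arbitrary choices made for this illustration, and real projects would normally rely on a library rather than hand-written loops.

```python
# How the three prerequisite areas show up in one tiny training loop.
# Data, learning rate and step count are arbitrary choices for the sketch.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated from y = 2x, so the ideal weight is 2

def dot(a, b):
    """Linear algebra: dot product of two vectors."""
    return sum(p * q for p, q in zip(a, b))

def mean_squared_error(w):
    """Statistics: average squared gap between predictions and targets."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w):
    """Calculus: derivative of the mean squared error with respect to w."""
    residuals = [w * x - y for x, y in zip(xs, ys)]
    return 2 * dot(residuals, xs) / len(xs)

w = 0.0  # start from an uninformed guess
for _ in range(200):
    w -= 0.01 * gradient(w)  # take a small step against the slope

print(round(w, 3), round(mean_squared_error(w), 6))
```

Changing the learning rate or the number of steps and watching how `w` approaches 2 is a quick way to build intuition before moving on to larger projects.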

If you’re looking forward to supercharging your career, learning Machine Learning may well be the way to go.
